Canonical

on 12 April 2023

Charmed Kubeflow is now available on AWS Marketplace

Run an MLOps toolkit within a few clicks on a major public cloud

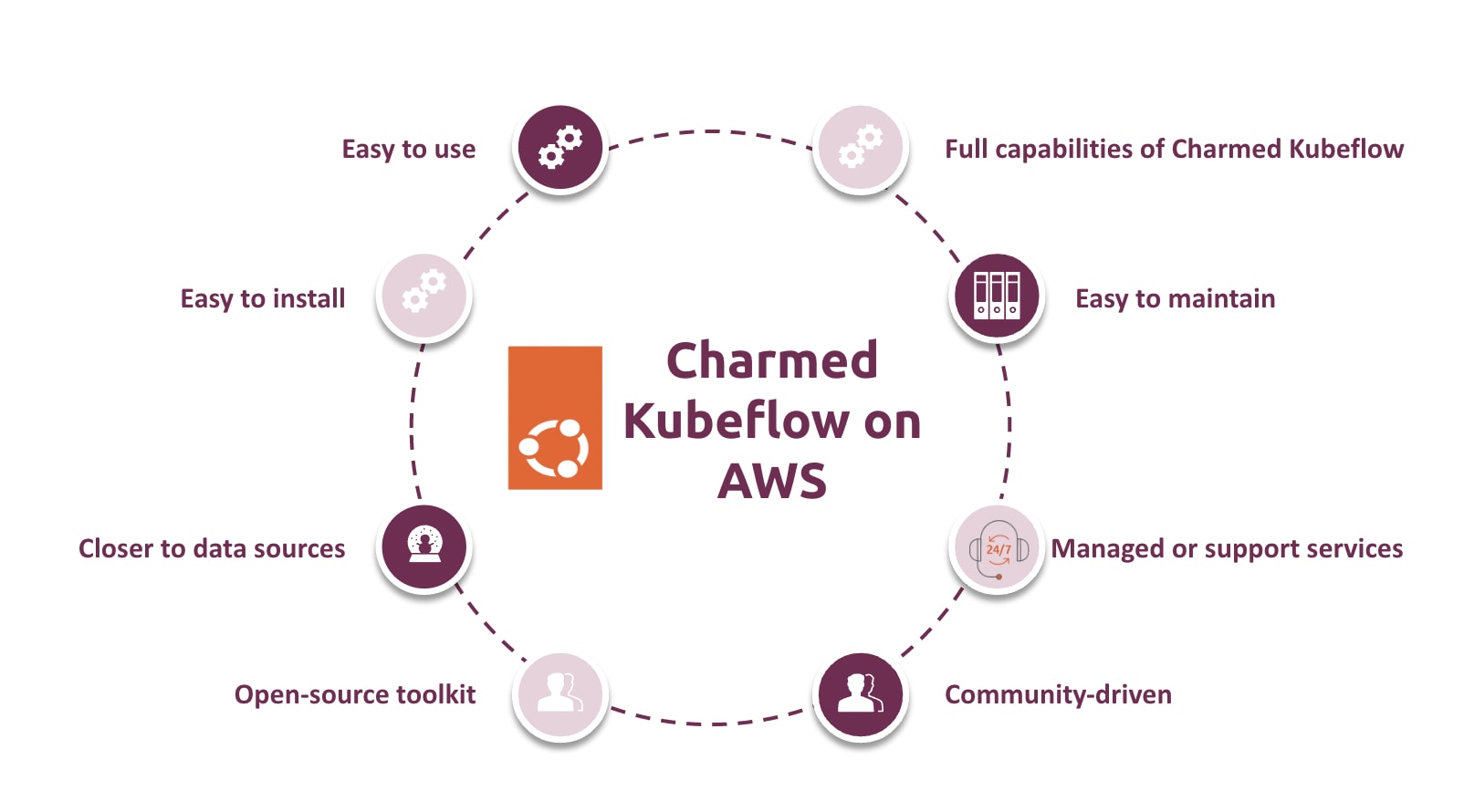

Canonical is proud to announce that Charmed Kubeflow is now available as a software appliance on the Amazon Web Services (AWS) Marketplace. With the appliance, users can launch and manage their machine learning workloads hassle-free using Charmed Kubeflow on AWS. This reduces deployment time and eases operations, providing an easy-to-install MLOps toolkit on the public cloud. It is ideal for users who want to explore the full capabilities of the platform and take machine learning models from experimentation to production in a secured and supported environment.

Quick path to MLOps experimentation on AWS

According to IBM’s Global AI Adoption Index, 35% of organisations reportedly adopted AI in 2022. This figure is expected to increase, with generative AI projects such as ChatGPT and GPT-4 accelerating adoption.

Public clouds are often a preferred method to kick-start AI/ML projects as they offer the computing power to experiment with no constraints. The Charmed Kubeflow appliance is a perfect match for the public cloud, enabling data scientists and machine learning engineers to set up an MLOps environment in minutes and reducing time spent on the design phase. Users can get familiar with the tool before running AI at scale.

Charmed Kubeflow is an open-source, end-to-end MLOps platform that runs on Kubernetes. It automates machine learning workflows to create a reliable application layer for model development, iteration and production deployment. By starting with an easy-to-install option such as the appliance, organisations can gain visibility into their workloads, quickly assess possible challenges and better plan their infrastructure expansion in line with the projected growth of their AI projects. You can try it now!

“The Charmed Kubeflow appliance from Canonical is a true lifesaver for anyone who has struggled with the complexities of setting up Kubeflow manually. As a user who has battled through numerous error messages and spent countless hours debugging Istio and Kubernetes, I can attest to the time and effort that is required. But with this cloud formation template, I simply click a button, wait, and voila – I have a fully-functional Kubeflow instance ready to use. And the best part? The tool is available for free, with users only paying for the AWS services they need. Kudos to Canonical for delivering such a valuable solution.” – Alexey Grigorev, Founder DataTalks.club

Join our joint workshop on 2 May to learn how to run MLOps on the public cloud

Register now

Deploy and scale open-source MLOps on AWS

Organisations across industries, from retail to healthcare, are looking for a quick return on investment from their AI projects. They often prefer the public cloud for machine learning workflows to reduce the wait time required to purchase hardware. However, any production-grade AI environment requires support and security maintenance for the infrastructure and application layer. Organisations need to ensure the protection of their models and data, regardless of the cloud used.

The Charmed Kubeflow appliance streamlines the machine learning lifecycle. Users can deploy models and then serve them to end devices, all within one tool. The appliance runs machine learning workloads securely, helping to protect them from attacks through scanning, patching, upgrades and updates to the latest versions of the libraries. It also monitors the evolution of the model and infrastructure, offering a clear picture of resource usage. For production-grade deployments, organisations can easily move their artefacts from the appliance to a supported deployment on the public cloud or in their own data centre.

Charmed Kubeflow is designed to run AI at scale. It supports multiple, concurrent project developments, ensuring reproducibility and portability.

“With the increasing popular awareness of AI, many companies are exploring machine learning for the first time. The Charmed Kubeflow appliance on AWS gives companies a great way to test out machine learning possibilities quickly and easily, but with a clear pathway to a scalable hybrid/multicloud deployment if those pilot projects are successful.” – Aaron Whitehouse, Senior Public Cloud Enablement Director

Open-source MLOps on any cloud

According to Forrester, hybrid cloud support for machine learning workloads is a key area of focus for two-thirds of IT decision-makers that have already invested in AI projects. Companies that invested heavily in the public cloud are looking for solutions that are compatible with it to remain cost-efficient and reduce time spent in the pre-processing phase.

Open-source MLOps solutions such as Charmed Kubeflow can run on any cloud. This enables organisations to use spare computing power, if available, on the public cloud, and then expand strategically based on their needs. Charmed Kubeflow provides a wide range of features and capabilities, giving teams the choice to run workloads wherever it is convenient and making it easier to migrate between different clouds.

Get started with Charmed Kubeflow on AWS

Data scientists and engineers can get started with Charmed Kubeflow within minutes by visiting the AWS Marketplace. The appliance is a quick way to run an instance of the product, and it provides the same capabilities as a traditional deployment. A fully managed option is available for organisations that want support for their MLOps tooling so that their teams can focus on their AI projects. Learn more about Managed Kubeflow on AWS.

Further reading

- Charmed Kubeflow 1.7 is now available

- [Whitepaper] A guide to MLOps

- [Solution brief] Enterprise AI at scale with NVIDIA and Canonical